Recently, I had the chance to watch major tech events like Computex online, where hardware giants, cloud pioneers, and AI visionaries gather. It wasn’t just the product launches or the sleek presentations that caught my attention. What moved me was something deeper: a glimpse of a new kind of world. A world we are beginning to shape not with bricks or blueprints, but with data and simulation.

That moment triggered something in me. I started reflecting on how civilization itself has evolved, and how we might be standing at the edge of a shift so fundamental that we don’t even have words for it yet.

Let me try.

The Invisible Foundations Beneath Progress

There’s a kind of infrastructure that we only notice once it’s missing. Electricity is like that. We take it for granted until a blackout hits. The same goes for the internet. We don’t think of it as infrastructure, yet it powers nearly everything we touch today.

But now, we’re entering a new stage. One that’s harder to see, but perhaps even more transformative. It’s not about powering homes or transmitting messages. It’s about building intelligence into the world itself.

Electricity made machines possible. The internet made information instant. Now, we are building systems that can reason, simulate, and respond, often faster than humans can. This is the beginning of intelligence as infrastructure.

It sounds abstract, but we’re already living in it. Every time your GPS reroutes in real time, every time an AI models a climate scenario, every time a company creates a digital version of a factory to optimize production, we’re inching closer to this new layer.

Digital Twins and the Logic of Mirroring

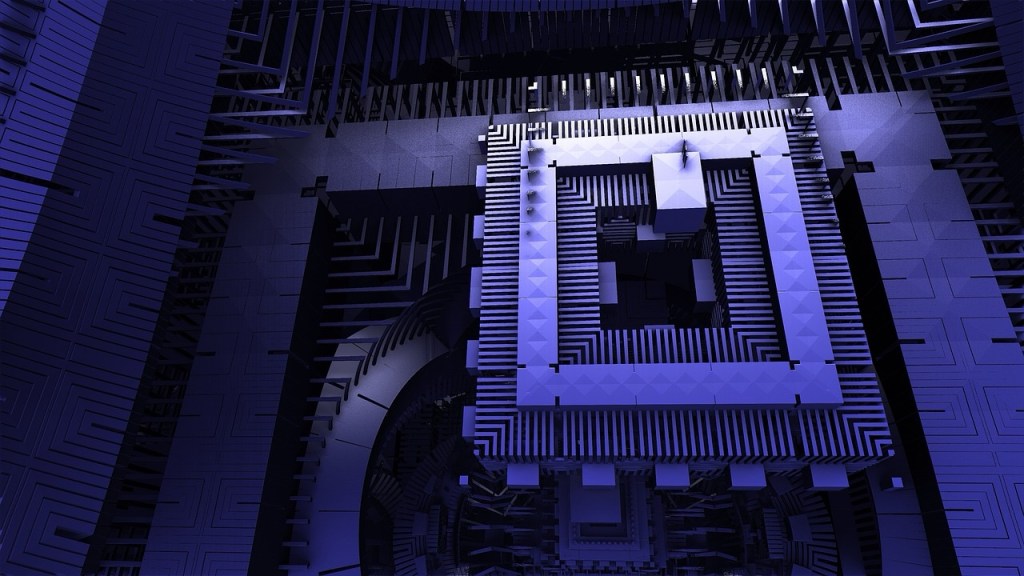

One of the key concepts that resonated with me from the event was the idea of the Digital Twin. It’s a simple idea with profound consequences. A Digital Twin is a virtual model of something physical, like a building, a bridge, a power grid, or even an entire city. But it’s not a static 3D file. It’s dynamic. It changes in real time as its real-world counterpart does.

Imagine a city where you can simulate traffic flows before rush hour. Or a factory that learns from itself every day and adjusts operations to reduce waste and energy. These are not futuristic dreams anymore. They are being built now, quietly, by companies and engineers around the world.

What struck me is that this feels like the real metaverse, not the consumer-facing one filled with avatars and concerts, but a deeper, industrial one. One grounded in precision, not fantasy. We’re not escaping reality; we’re mirroring it, understanding it, and learning how to work with it more wisely.

And this isn’t limited to physical systems. Increasingly, businesses are creating digital twins of their own processes, customer journeys, and even financial flows. The goal isn’t just optimization. It’s the capacity to see, to perceive the structure of reality with enough fidelity that we can improve it without guesswork.

Taiwan’s Quiet Transformation

During the keynote, what impressed me most wasn’t just the technology. It was the geopolitical clarity that came through. Taiwan, long known as a manufacturing hub for the world’s tech giants, is stepping into a new role. The factories are still there, but now something else is emerging.

Large-scale AI data centers. AI factories. Advanced simulation campuses. Massive energy allocations to power intelligence. Taiwan is becoming a kind of simulation capital, not just for Asia, but for the world.

When I heard about plans to build supercomputers with tens of thousands of GPUs, or about AI training facilities that might one day model entire economies, I felt a shift. This is more than an industrial upgrade. It’s a repositioning.

What used to be a supply chain hub is becoming a reality design hub.

This transformation makes sense. Taiwan already has the hardware, the engineers, the ecosystem. Now, it is beginning to build the infrastructure for simulating intelligence at scale. That shift, to me, is profound.

From Molecules to Metropolises: Simulating Everything

At the same time, another kind of simulation is starting to gain momentum, one that doesn’t model factories or traffic, but the very building blocks of nature.

Quantum simulation.

Right now, our ability to simulate chemical reactions is limited by classical computing. The number of quantum states needed to describe a molecule exactly grows exponentially with its size, so even modest molecules quickly overwhelm conventional machines. Quantum computers, which operate under the same quantum rules as the molecules themselves, could in principle model these interactions natively. Today’s hardware can handle only very small molecules of a few atoms. In time, we may simulate dozens of atoms, then hundreds.

This could change drug development, battery research, materials science, and agriculture. Imagine being able to test thousands of chemical interactions before ever setting foot at a lab bench. Much of the experimental phase could move into quantum simulation, reducing time, cost, and error.

In essence, we are learning to simulate not just our machines and systems, but life itself: molecules, proteins, energy patterns. The invisible world is becoming computable.

What connects this to Digital Twins is the same philosophical shift: the growing belief that the best way to understand something is to simulate it first. Don’t guess. Don’t experiment blindly. Model it, test it, rehearse it, and then act.

AI Factories: Where Intelligence Is Manufactured

The phrase “AI Factory” sounds poetic, but it’s now a literal thing.

In Taiwan and elsewhere, companies are building massive facilities dedicated not to assembling gadgets, but to training and refining intelligence. These aren’t just data centers. They are sites where vast amounts of information are processed, analyzed, and turned into models that can make decisions, write code, compose music, and simulate futures.

What factories were to the industrial revolution, AI factories are to this one. But instead of producing goods, they produce capabilities.

When paired with Digital Twins, these capabilities can run simulations across industries, from logistics and energy to agriculture and transportation. They can test thousands of potential futures and recommend the best paths.

It’s no longer enough to have data. We need interpretation. Judgment. Scenario-building. The AI Factory is where that happens.

And unlike past factories, which needed physical raw materials, these rely on data, compute, and training feedback loops. That’s why countries are racing not just to secure chip supply chains, but to build sovereign AI infrastructure.

A New Epistemology: Beyond Popper’s Boundaries

One more reflection emerged as I thought about all this, one that touches not only technology but the very roots of knowledge itself.

For most of the past century, the scientific method has been framed in terms articulated by thinkers like Karl Popper. At its heart is falsifiability: a claim must be testable and refutable to be considered scientific. The beauty of this approach lies in its clarity. It guards against superstition, ideology, and vague metaphysics. Science progresses by pruning away what doesn’t hold up under experiment.

But what if our experiments become simulations? What if our knowledge no longer depends on physically falsifying a single hypothesis, but on modeling entire systems across time, space, and probability?

Popper’s framework emerged from an era dominated by reductionism. One variable at a time. Isolate, test, confirm, or refute. This method gave us the steam engine, antibiotics, rockets, and microchips. It was powerful because it was practical. But reality isn’t always isolatable. Climate, ecosystems, economies, and consciousness are full of interdependencies that resist being taken apart.

And now, with AI and quantum simulation, we are approaching problems that are no longer about testing single claims, but about running entire universes of possibility.

This doesn’t make falsifiability obsolete. It simply reveals its limitation. Like Newton giving way to Einstein, we may be entering a post-Popperian paradigm, where scientific progress depends not on what can be disproven, but on what can be simulated, scaled, and observed across dimensions too complex to isolate.

Instead of one hypothesis tested in one lab, we now have millions of models running in parallel, each offering probabilistic outcomes. In this context, understanding becomes less about binary truths and more about pattern recognition, systems thinking, and emergent coherence.

We are not abandoning the scientific method. We are expanding it.

Simulation is not a shortcut. It’s a second microscope. A second telescope. A second kind of vision that allows us to comprehend what no single reductionist experiment ever could.

This, too, is infrastructure. Not physical, but philosophical.

And perhaps, in a follow-up essay, I’ll explore this post-Popperian future more deeply. It feels like a necessary shift, one that science itself is already beginning to acknowledge.

The New Infrastructure of Imagination

We used to dream of flying cars and space hotels. But maybe the real breakthrough is something less visible and more powerful.

The ability to simulate the world at all scales, from atoms to cities, and to do so with intelligence, accuracy, and imagination.

That’s the infrastructure we’re building now.

And once it’s in place, everything else (energy, mobility, climate, medicine) may follow a new kind of logic. One where we don’t simply react to reality, but participate in shaping it.

Not as gods, but as stewards. With humility. With insight. And, most of all, with a clearer picture of what’s possible.

Image by Pete Linforth
